This feature is in BETA status. Functionality, design, and scope may change significantly over time.

🤔 Why should I use transcription? Transcribing customer calls improves quality assurance and makes call processing more efficient. For example, it becomes easier to summarize past customer interactions and, if needed, transfer them to a CRM system for easier search and later analysis.

PRECONDITIONS

- Enterprise / Contact Center: The service needs to have an Enterprise or Contact Center license assigned in order to use the Transcription feature.

- Your tenant needs to have available Transcription licenses in Licenses Tenant Settings.

- As an Administrator, you need to set up the speech service in Azure and note down the API key. The API key needs to be added in the Nimbus settings.

💡 Note that the usage of the Transcription feature will cause additional monthly ACS costs.

- As an Administrator/Team Owner, you need to enable Transcription in Service Settings > Virtual Assistants and add it as a widget via Extensions Service Settings.

- Each user who wants to use the Live Caption/Transcription feature needs to have a Transcription license applied. This is done by an administrator in General User Settings.

AZURE BILLING

The usage of the Transcription feature will cause additional monthly ACS costs. The costs are determined by Microsoft. Also see https://azure.microsoft.com/en-us/pricing/details/cognitive-services/speech-services/.

- Before enabling the Transcription feature, get in touch with your Luware Customer Success specialist to discuss terms, usage scenarios and necessary setup procedures.

- Please note that Nimbus and Transcription support does not cover pricing discussions or recommendations regarding Microsoft Azure infrastructure.

Virtual Assistant transcription modes

Virtual Assistant works in two modes:

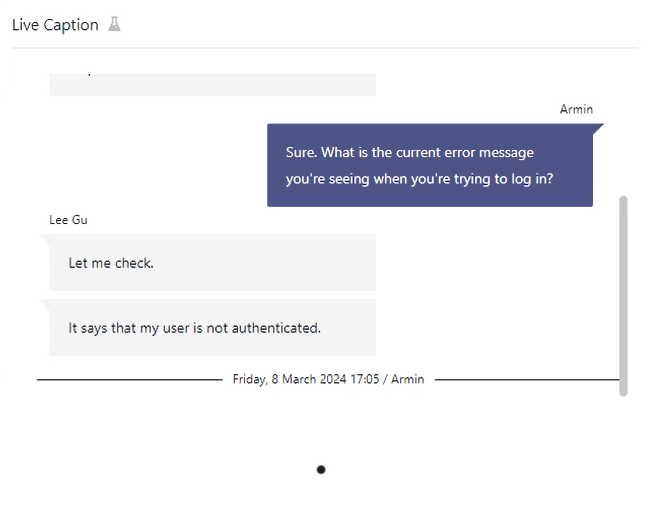

- Live Captioning is performed during the ongoing call and displayed on the My Sessions page.

- Voice Transcription is generated when the call ends. You can retrieve it using the Nimbus Power Automate Connector and write the output to any target, e.g. a Teams message or an email. → More on this below.

🔎 This feature supports various regions as specified by Microsoft AI services. It can detect and support up to 4 languages per API configuration.
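The language limit mentioned above can be checked before a configuration is saved. The following is a minimal sketch, not part of Nimbus: the helper name `validate_languages` and the constant `MAX_LANGUAGES` are hypothetical and only illustrate the "up to 4 languages per API configuration" rule.

```python
# Hypothetical helper, not a Nimbus or Azure API.
MAX_LANGUAGES = 4  # limit stated above: up to 4 languages per API configuration


def validate_languages(locales):
    """Return the de-duplicated locale list, or raise ValueError if the limit is exceeded."""
    unique = list(dict.fromkeys(locales))  # removes duplicates, keeps first-seen order
    if not unique:
        raise ValueError("At least one language is required.")
    if len(unique) > MAX_LANGUAGES:
        raise ValueError(
            f"{len(unique)} languages given, but only {MAX_LANGUAGES} are supported."
        )
    return unique


# The duplicate "en-US" is dropped, so three languages remain and the check passes.
print(validate_languages(["en-US", "de-CH", "fr-CH", "en-US"]))
```

Running a check like this early may help catch a fifth language before it reaches the speech service configuration.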

Voice Transcription

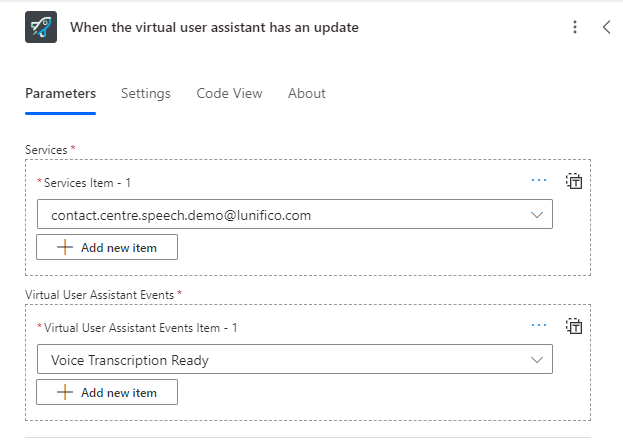

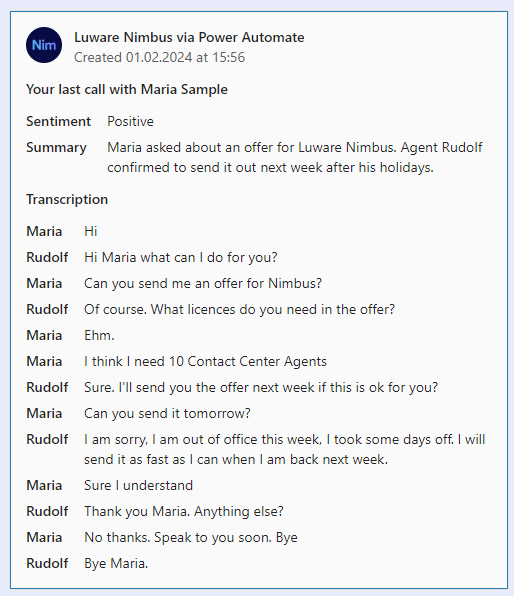

Once a call has ended, a voice transcription from Microsoft is generated. You can show this in My Sessions to your users or retrieve the transcribed text via Nimbus Power Automate Connector by leveraging the Trigger Event "When the virtual user assistant has an update".
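Once the transcribed text has been retrieved, it usually needs to be turned into readable text before it is posted to a Teams message or mail. The sketch below shows one way this could look. The payload shape and the field names `sessionId`, `transcript`, `speaker` and `text` are assumptions for illustration only; the actual output of the trigger event is not described here and may differ.

```python
def format_transcript(update):
    """Build a plain-text summary from a HYPOTHETICAL trigger payload.

    The keys used here (sessionId, transcript, speaker, text) are assumptions,
    not the documented connector schema.
    """
    lines = [f"Transcript for session {update.get('sessionId', 'unknown')}"]
    for entry in update.get("transcript", []):
        speaker = entry.get("speaker", "Unknown")
        text = entry.get("text", "").strip()
        if text:  # skip empty utterances
            lines.append(f"{speaker}: {text}")
    return "\n".join(lines)


# Hypothetical example payload for illustration only.
example_update = {
    "sessionId": "demo-001",
    "transcript": [
        {"speaker": "Customer", "text": "Hello, I have a question about my invoice."},
        {"speaker": "User", "text": "Sure, let me take a look."},
        {"speaker": "Customer", "text": "  "},
    ],
}

print(format_transcript(example_update))
```

In a real flow, the equivalent formatting would typically be done with Power Automate actions; the Python version only illustrates the idea.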


Related Concepts and Steps

✅ After setting up a Speech Recognizer in the Configuration you can select it here. Refer to Use Case - Setting Up Transcription for detailed instructions.

✅ Follow-up steps:

- You can start using the Nimbus Power Automate Connector by leveraging Trigger Events and Flow Actions, as described in our example Use Case - Analyzing a Transcript.

- The Virtual Assistant “Voice Transcription” feature is also used to show a Transcript widget with historical (concluded) sessions, enabled via Extensions Service Settings > Widgets.

Live Captioning

After enabling Live Captioning, a “Live Captioning” widget can be enabled in the Extensions Service Settings. Nimbus users will see this interaction as described on the Transcription feature page.

Related Concepts and Steps

✅ Enabling “Voice Transcription” and setting up a Speech Recognizer in the Configuration is mandatory to use “Live Captioning”. This is necessary so that the right language engine is used for voice detection.

💡Good to know:

- When the feature is disabled or unavailable (e.g. due to throttling, missing settings, or service impediments on Microsoft's side), info messages are shown in the frontend UI widget. Nimbus task handling and user-customer interactions themselves are not affected by this and will continue normally.

- Only the transcription between the current User and the Customer is kept. Third parties are not included. → Also refer to the Known Limitations chapter below.

✅ Follow-up steps:

- Live Captioning is also used to show a Live Caption widget with historical (concluded) sessions, enabled via Extensions Service Settings > Widgets.

Known Limitations

KNOWN TRANSCRIPTION LIMITATIONS

Transcription is currently only supported for inbound calls. Outbound calls (i.e. Call On Behalf) will not be transcribed.

Supervision - by design (not a limitation): As long as Supervisors remain in Listen or Whisper mode during a conversation, their voice is not transcribed. Only during "Barge In" are they part of the conversation and included in the transcription.
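The rules above can be summarized as a small decision function. This is a sketch for illustration only: the function name and the string labels (`"inbound"`, `"supervisor"`, `"barge_in"` and so on) are hypothetical and do not correspond to Nimbus identifiers.

```python
def is_voice_transcribed(call_direction, role, supervision_mode=None):
    """Return True if a participant's voice would be transcribed under the rules above.

    All labels are hypothetical:
      call_direction: "inbound" or "outbound"
      role: "user", "customer" or "supervisor"
      supervision_mode: "listen", "whisper" or "barge_in" (supervisors only)
    """
    if call_direction != "inbound":
        return False  # outbound calls (e.g. Call On Behalf) are not transcribed
    if role == "supervisor":
        return supervision_mode == "barge_in"  # Listen and Whisper are excluded
    return role in ("user", "customer")  # other third parties are not kept


print(is_voice_transcribed("inbound", "customer"))
print(is_voice_transcribed("inbound", "supervisor", "whisper"))
print(is_voice_transcribed("inbound", "supervisor", "barge_in"))
print(is_voice_transcribed("outbound", "user"))
```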