Enterprise Routing, Contact Center - This tab is only available to Enterprise Routing and Contact Center services. These settings control general feature availability and visibility for users. More details are provided further below on this page.

Companion - Companion features are licensed and enabled per user (General User Settings). More details are described further below on this page.

The Companion Service Settings allow you to configure AI-driven features that help improve your overall service performance, for example by assisting your service users with AI-driven context, live captioning, or transcription functions.

Speech Recognizer

☝All Transcription and Live Captioning features described on this page require prior setup of a Speech Recognizer in the Nimbus Configuration. This ensures that the right language engine is used for voice detection. Speech Recognizers are pre-configured Nimbus items that point to a 3rd-party service for processing. A configured and working Speech Recognizer is the prerequisite for any Companion feature that involves voice-to-text capabilities.

Transcription

This feature is in PREVIEW and may not yet be available to all customers. Functionality, scope and design may change considerably.

PRECONDITIONS

✅Related Admin Use Case: Refer to Use Case - Setting Up Transcription for detailed step-by-step instructions.

Nimbus service and user licensing

🔎Transcription features have service and user requirements. These requirements apply to both mid-session Live Caption and post-session Transcription/Summarization features.

| Service requirements | User requirements |
|---|---|
| Enterprise Routing, Contact Center | Companion |

Azure Speech Services

🔎The features described below use “Speech Services” hosted by Microsoft, in the regions supported by Microsoft AI services. Using the Transcription features and related APIs will cause additional monthly ACS costs outside of your Nimbus subscription.

Speech Services API Setup

- As an Administrator, you need to set up speech services in Azure and note down the API key. Read our Use Case - Setting Up a Speech Recognizer in Azure Portal and Nimbus for more details on this.

- The API key needs to be added in the Nimbus Speech Recognizer settings. The configured Speech Recognizer is then used for Transcription.
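Taken together, the service, user and Speech Recognizer requirements above form a short checklist. The following is a minimal sketch of that checklist. It assumes a simplified, hypothetical data model: `SpeechRecognizer`, its `region` field and `transcription_blockers` are illustrative names, not a Nimbus API. The sketch only mirrors the rules stated on this page.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical names: Nimbus does not expose these settings as a Python API.
ELIGIBLE_SERVICE_TYPES = {"Enterprise Routing", "Contact Center"}


@dataclass
class SpeechRecognizer:
    name: str
    api_key: str  # key noted down from the Azure Speech resource
    region: str   # Azure region of that resource (assumed field)


def transcription_blockers(service_type: str,
                           has_companion_license: bool,
                           recognizer: Optional[SpeechRecognizer]) -> list[str]:
    """Return the unmet preconditions; an empty list means none are missing."""
    blockers = []
    if service_type not in ELIGIBLE_SERVICE_TYPES:
        blockers.append("service must be Enterprise Routing or Contact Center")
    if not has_companion_license:
        blockers.append("user needs a Companion license (General User Settings)")
    if recognizer is None or not recognizer.api_key.strip():
        blockers.append("a Speech Recognizer with an Azure API key is required")
    return blockers


print(transcription_blockers("Advanced Routing", True, None))
```

The function returns every missing item rather than stopping at the first one. This lets an administrator see the complete list of open setup steps at once.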

Optional: Power Automate connector integration

🔎Optional step: The Nimbus Power Automate Connector can extract Transcription/Summarization data for implementing additional use cases. To achieve this, the following steps need to be performed by an administrator:

- You need to set up a Power Automate flow that uses the “Companion” Trigger Event to react to any ongoing transcription session event.

- You then require the “Companion” Flow Action to capture the data for any further processing.

💡Some Use Case examples from our Knowledge Base:

AZURE BILLING

The usage of the Transcription feature will cause additional monthly ACS costs. The costs are determined by Microsoft. Also see https://azure.microsoft.com/en-us/pricing/details/cognitive-services/speech-services/.

- Before enabling the Transcription feature, get in touch with your Luware Customer Success specialist to discuss terms, usage scenarios and necessary setup procedures.

- Please note that Nimbus and Transcription support does not cover pricing discussions or make recommendations based on Microsoft Azure infrastructure.

When active, the Transcription feature transcribes call contents using Voice-to-Text capabilities. It improves quality assurance and increases the efficiency of call processing, for example making it easier to summarize past customer interactions and possibly transfer them to a CRM system for easier search and later analysis.

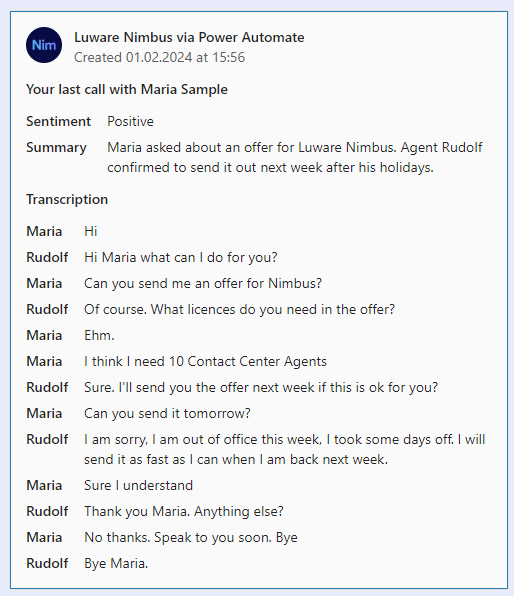

Once a call has ended, a voice transcription is generated in the background. Using the “Companion” Trigger Events and Flow Actions of the Nimbus Power Automate Connector, you can then forward the generated transcription to any target, e.g. wrapped in an Adaptive Card as a Teams chat message, or via email.
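To illustrate the Adaptive Card option, the following sketch wraps a transcript in a minimal card payload. It assumes the transcript arrives as a list of (speaker, text) pairs. This shape is hypothetical, and the real “Companion” Flow Action output will differ. The card fields (`type`, `version`, `body`, `TextBlock`, `wrap`) follow the public Adaptive Cards schema. In Power Automate, you would typically build the same JSON inside the flow rather than in Python.

```python
import json


def transcript_to_adaptive_card(session_id: str, lines: list[tuple[str, str]]) -> dict:
    """Wrap (speaker, text) pairs in a minimal Adaptive Card for a Teams message."""
    body = [{
        "type": "TextBlock",
        "text": f"Transcript for session {session_id}",
        "weight": "Bolder",
        "size": "Medium",
        "wrap": True,
    }]
    for speaker, text in lines:
        # One TextBlock per utterance; TextBlock supports a markdown subset (bold)
        body.append({"type": "TextBlock", "text": f"**{speaker}:** {text}", "wrap": True})
    return {
        "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
        "type": "AdaptiveCard",
        "version": "1.4",
        "body": body,
    }


# Hypothetical session ID and transcript lines, for illustration only
card = transcript_to_adaptive_card(
    "hypothetical-session-id",
    [("Customer", "My invoice is wrong."), ("Agent", "Let me check that for you.")],
)
print(json.dumps(card, indent=2))
```

Teams limits message size, so very long transcripts may need to be shortened or sent by email instead.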


Optionally you can also show the transcription in My Sessions, allowing users to have a direct written record of previous conversations with customers.

Summarization

This feature is in PREVIEW and may not yet be available to all customers. Functionality, scope and design may change considerably.

🤝 Features and functionality described in the context of this page use runtime resources under a fair use policy. Luware may change the usage limit or contact customers that exceed the general usage quota.

✅ PRECONDITIONS

Companion - Summarization is an optional user feature:

- The Transcription feature must be enabled in the Companion Service Settings, as Summarization is derived from it.

- The user needs a Companion License, applied via General User Settings.

The Nimbus Companion integrates AI summarization as part of a broader suite of Transcription features, aimed at improving service performance and agent efficiency. During a call, Nimbus transcribes the conversation in real time. After the call, the transcript is processed by an AI service to generate a summary with the following aspects:

| Aspect | Description |
|---|---|
| Title | A title generated from the Call Direction, the Service, and the User who accepted the task. |
| Issue | Shortened problem description by the Customer. |
| Resolution | Summary of how the issue was resolved. |
| Recap | A brief paragraph summarizing the interaction. |
| Narrative | Detailed call notes or chat summary of the entire Customer/User Interaction. |
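For downstream processing, it can help to treat a summary as a record in which any aspect may be absent. This happens when the transcript holds too little data. The following is a minimal sketch under that assumption. `CallSummary`, `missing_aspects` and the title format in `build_title` are hypothetical. In Nimbus, the AI service generates the actual title.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class CallSummary:
    # Mirrors the aspects in the table; each may be None when there is
    # not enough transcript data to derive it.
    title: Optional[str] = None
    issue: Optional[str] = None
    resolution: Optional[str] = None
    recap: Optional[str] = None
    narrative: Optional[str] = None

    def missing_aspects(self) -> list[str]:
        """Names of aspects that were not generated, in table order."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]


def build_title(call_direction: str, service: str, user: str) -> str:
    # Hypothetical format combining the three inputs the Title is based on
    return f"{call_direction} call | {service} | {user}"


summary = CallSummary(title=build_title("Inbound", "IT Helpdesk", "Jane Doe"),
                      recap="Customer was guided through a password reset.")
print(summary.missing_aspects())
```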

Summarization Outputs

Via My Sessions: Summarization outputs can be presented to the agent via My Sessions > Companion widget.

💡All aspects shown within the Companion Widget > Summarization can be hovered over and copied.

✅ Precondition: The “Companion” widget itself needs to be enabled in the Extensions Service Settings in order to be shown to users.

Via Nimbus Power Automate Connector: Administrators can also flexibly retrieve and store Summarization outputs using Companion Flow Actions and the User/Service SessionID. This can be useful to keep a record of calls, e.g. within a CRM or a long-term data storage and evaluation platform.
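The key design point when storing summaries externally is to key each record by SessionID, so that re-running a flow for the same session updates the record instead of duplicating it. The following sketch illustrates this with a local SQLite database standing in for the CRM or storage platform. The table layout and the `open_store`/`save_summary` helpers are hypothetical. A real flow would write through a connector to your target system.

```python
import sqlite3
from typing import Optional


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a summaries table; ':memory:' keeps the demo self-contained."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS summaries ("
        " session_id TEXT PRIMARY KEY,"
        " issue TEXT, resolution TEXT, recap TEXT)"
    )
    return conn


def save_summary(conn: sqlite3.Connection, session_id: str, issue: Optional[str],
                 resolution: Optional[str], recap: Optional[str]) -> None:
    # INSERT OR REPLACE keys on session_id, so re-running a flow for the same
    # session updates its row instead of creating a duplicate.
    conn.execute(
        "INSERT OR REPLACE INTO summaries (session_id, issue, resolution, recap)"
        " VALUES (?, ?, ?, ?)",
        (session_id, issue, resolution, recap),
    )
    conn.commit()
```

For example, saving the same hypothetical SessionID twice leaves only one row, containing the latest values.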

Known Limitations

Summarization relies on the Transcription feature and therefore shares the same design limitations.

KNOWN TRANSCRIPTION & SUMMARIZATION LIMITATIONS

💡We are actively working on further improvements to the following limitations:

- Transcription needs to be enabled in order to use Live Captioning and Summarization. Speech Recognizers are required for all features.

- Transcription data is not part of service/user transfers. Data is kept within the current customer/user session.

- Transcription is currently only supported for direct inbound calls. Outbound calls (i.e. Call On Behalf) and consultation calls (to either users or services) are not yet supported.

- All AI generated data (Transcription, Summarization) is currently stored for 30 days. Additional configuration options are planned for 2026.
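The dependency, call-type and retention rules above can be summarized in a few lines. The following is a minimal sketch with hypothetical names and call-kind labels. It assumes the 30-day retention period starts when the session ends, which this page does not specify. Live Captioning and Summarization depend on Transcription as stated above. Codes and Tags Suggestion depend on it according to their own preconditions.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=30)  # current retention for all AI-generated data

# Live Captioning/Summarization: per the limitations above.
# Codes/Tags Suggestion: per their own preconditions.
DEPENDS_ON_TRANSCRIPTION = {"Live Captioning", "Summarization",
                            "Codes Suggestion", "Tags Suggestion"}

# Hypothetical call-kind labels; only direct inbound calls are transcribed today
TRANSCRIBABLE_CALL_KINDS = {"direct inbound"}


def available_features(recognizer_configured: bool, transcription_enabled: bool) -> set[str]:
    """Without a Speech Recognizer and enabled Transcription, nothing is available."""
    if not (recognizer_configured and transcription_enabled):
        return set()
    return {"Transcription"} | DEPENDS_ON_TRANSCRIPTION


def is_transcribable(call_kind: str) -> bool:
    return call_kind in TRANSCRIBABLE_CALL_KINDS


def retention_expiry(session_ended_at: datetime) -> datetime:
    # Assumption: retention is counted from the end of the session
    return session_ended_at + RETENTION
```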

DESIGN NOTES

- Feature availability - Transcription relies on external services and APIs. When the feature is disabled or unavailable (e.g. throttling, settings or Microsoft service impediments) info messages are shown in the frontend UI widget. Nimbus task handling and user-customer interactions themselves are not affected by this and will continue normally.

- Scope limitation: Only the transcription between the Nimbus user and the customer is kept. Third parties and call conference attendees are not part of the content transcribed.

- Supervision - As long as Supervisors remain in Listen or Whisper mode during a conversation, their voice is not transcribed. Only during “Barge In” are they part of the conversation, and their voice is transcribed.

- Summarization items generated from the Transcription might be missing when there is not enough data to draw from.
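The scope and supervision notes amount to a simple rule about whose speech ends up in the transcript. Nimbus applies this rule when it captures the audio. The following sketch models it instead as a filter over already-labelled speech segments, purely to make the rule explicit. The `Segment` type and its role/mode labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Segment:
    role: str                      # "user", "customer", "supervisor", "third party" (hypothetical labels)
    supervisor_mode: Optional[str]  # "listen", "whisper" or "barge in" for supervisors, else None
    text: str


def is_transcribed(seg: Segment) -> bool:
    if seg.role in ("user", "customer"):
        return True
    if seg.role == "supervisor":
        # Listen and Whisper stay out of the transcript; Barge In joins the conversation
        return seg.supervisor_mode == "barge in"
    return False  # third parties and conference attendees are excluded


def transcript(segments: list[Segment]) -> list[Segment]:
    return [s for s in segments if is_transcribed(s)]
```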

Codes Suggestion

This feature is in PREVIEW and may not yet be available to all customers. Functionality, scope and design may change considerably.

✅ Precondition: Transcription must be enabled and a Transcriber set in order to use Codes Suggestion.

The Codes Suggestion feature provides code suggestions in My Sessions after a call has finished. If you haven't added codes to the session yet, Companion suggests them automatically. If you have already added codes, click the suggest icon to see and apply code suggestions.

💡When configuring Codes in Configuration, you can add the context related to this code in the Companion Context field to support Nimbus Companion in suggesting codes.
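The following toy sketch illustrates two points: the trigger rule (automatic only when no codes are set, otherwise on request) and why a descriptive Companion Context helps. Nimbus uses an AI service to suggest codes. The naive keyword overlap used here is a stand-in technique chosen only for illustration, and all names are hypothetical.

```python
import re


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def suggest_codes(transcript: str, code_contexts: dict[str, str],
                  existing_codes: list[str], suggest_clicked: bool = False) -> list[str]:
    # Trigger rule from this page: automatic only when no codes are set yet,
    # otherwise only after the user clicks the suggest icon.
    if existing_codes and not suggest_clicked:
        return []
    spoken = _words(transcript)
    scored = []
    for code, context in code_contexts.items():
        # Toy relevance score: shared words between transcript and Companion Context
        overlap = len(spoken & _words(context))
        if overlap and code not in existing_codes:
            scored.append((overlap, code))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [code for _, code in scored]
```

A code whose Companion Context is empty can never match in this sketch. This mirrors the advice to describe each code's context.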

Tags Suggestion

This feature is in PREVIEW and may not yet be available to all customers. Functionality, scope and design may change considerably.

✅ Precondition: Transcription must be enabled and a Transcriber set in order to use Tags Suggestion.

The Tags Suggestion feature provides up to 5 tag suggestions in My Sessions, taken from the list of tags already assigned to service sessions. If you haven't added tags to the session yet, tag suggestions are shown automatically after the call has finished. You can either select specific tags or apply them all. Tags you have already selected yourself are not overwritten; in this case, click the suggest icon to see and apply tag suggestions.
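The tag rules above (only known tags, at most 5, never overwrite the user's picks) can be sketched as follows. The sketch assumes the AI service returns a ranked list of candidate tags. That input, and all function names, are hypothetical.

```python
MAX_TAG_SUGGESTIONS = 5


def tag_suggestions(ranked_candidates: list[str], known_tags: set[str]) -> list[str]:
    """Keep only tags already used on the service's sessions, in ranked order, max 5."""
    out: list[str] = []
    for tag in ranked_candidates:
        if tag in known_tags and tag not in out:
            out.append(tag)
        if len(out) == MAX_TAG_SUGGESTIONS:
            break
    return out


def show_automatically(selected_tags: list[str]) -> bool:
    # Suggestions appear on their own only while the session has no tags yet
    return not selected_tags


def apply_suggestions(selected_tags: list[str], chosen: list[str]) -> list[str]:
    """Add chosen suggestions without overwriting tags the user already picked."""
    return selected_tags + [t for t in chosen if t not in selected_tags]
```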

Virtual Assistants

Live Captioning

✅ Precondition: Transcription must be enabled in order to use Live Caption.

The Live Caption feature converts the voices of ongoing call participants directly into text, using Voice-to-Text capabilities. The text is displayed live on the My Sessions page.
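Streaming speech recognizers such as Azure Speech emit intermediate hypotheses while a phrase is spoken, followed by a final result for that phrase. A common way to display live captions from such events is to replace the partial line until it is finalized. The following sketch shows that general pattern. It is an assumption for illustration, not a description of the Nimbus My Sessions implementation, and `CaptionBuffer` is a hypothetical name.

```python
class CaptionBuffer:
    """Keeps finalized caption lines plus the current, still-changing partial line."""

    def __init__(self) -> None:
        self.final_lines: list[str] = []
        self.partial: str = ""

    def on_partial(self, text: str) -> None:
        # Intermediate hypotheses replace each other as the speaker talks
        self.partial = text

    def on_final(self, text: str) -> None:
        # A finalized phrase is appended and the partial line is cleared
        self.final_lines.append(text)
        self.partial = ""

    def render(self, max_lines: int = 3) -> list[str]:
        """Last few finalized lines, followed by the partial line if there is one."""
        lines = self.final_lines[-max_lines:]
        if self.partial:
            lines = lines + [self.partial]
        return lines
```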

Follow-Up Actions

✅ Service Owner follow-up action: For Transcription and Summarization features to be visible to your users, the “Companion” widget must be enabled via Extensions Service Settings > Widgets.

You can inform your Service team once the feature has been enabled, so they know to look out for it in their My Sessions view.

✅Power Automate alternative for Companion data: You can also leverage the Nimbus Power Automate Connector by using “Companion” related Trigger Events and Flow Actions, as described in our example Use Case - Analyzing a Transcript. While this adds configuration complexity, it gives you more fine-grained control over the transcription data, allowing you to process or store it externally, or to show it to additional users via Adaptive Cards.

Known Limitations

Live Captioning relies on the Transcription feature and therefore shares the known limitations and design notes listed under Summarization > Known Limitations.