
This feature is in PREVIEW and may not yet be available to all customers. Functionality, scope and design may change considerably.

The Transcription feature enables live captioning and voice transcription for calls. It uses Speech-to-Text (STT) technology to convert spoken words into written text. When enabled, the Transcription feature appears as a widget in My Sessions and in the Attendant Console sidebar.


AZURE BILLING

Using the Transcription feature incurs additional monthly ACS costs. These costs are determined by Microsoft. Also see https://azure.microsoft.com/en-us/pricing/details/cognitive-services/speech-services/.

- Before enabling the Transcription feature, get in touch with your Luware Customer Success specialist to discuss terms, usage scenarios and necessary setup procedures.

- Please note that Nimbus and Transcription support does not cover pricing discussions or make recommendations based on Microsoft Azure infrastructure.


PRECONDITIONS

✅Related Admin Use Case: Refer to Use Case - Setting Up Transcription for detailed step-by-step instructions.

Nimbus service and user licensing

🔎Transcription features have service and user requirements. These requirements apply to both mid-session Live Caption and post-session Transcription / Summarization features.

| Requirement | Licenses |
|---|---|
| Service requirements | Enterprise Routing, Contact Center |
| User requirements | Companion |

Azure Speech Services

🔎The features described below use “Speech Services” hosted by Microsoft, which are available in the regions specified by Microsoft AI services. Using the Transcription features and related APIs incurs additional monthly ACS costs outside of your Nimbus subscription.

Speech Services API Setup

- As an Administrator, you need to set up speech services in Azure and note down the API key. Read our Use Case - Setting Up a Speech Recognizer in Azure Portal and Nimbus for more details on this.

- The API key needs to be added in the Nimbus Speech Recognizer settings. The configured Speech Recognizer is then used for Transcription.
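Before entering the API key in Nimbus, it can help to confirm that Azure accepts it. The following is a minimal sketch, not part of Nimbus: it assumes a regional Azure Speech resource and uses the token endpoint of the Azure Speech service. The function names, the example region, and the placeholder key are illustrative assumptions; resources with a custom domain may use a different endpoint.

```python
import urllib.error
import urllib.request


def build_token_request(region: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a token request for a regional Azure Speech resource."""
    if not region or not api_key:
        raise ValueError("region and api_key are required")
    # Assumption: the resource uses the standard regional endpoint.
    url = f"https://{region}.api.cognitive.microsoft.com/sts/v1.0/issueToken"
    return urllib.request.Request(
        url,
        data=b"",  # empty body; the endpoint expects a POST
        headers={"Ocp-Apim-Subscription-Key": api_key},
        method="POST",
    )


def key_is_accepted(region: str, api_key: str, timeout: float = 10.0) -> bool:
    """Return True if Azure issues a token for the key, False on an HTTP error."""
    request = build_token_request(region, api_key)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except urllib.error.HTTPError:
        # Typically 401 for a wrong key or a key from another region.
        return False
```

Calling `key_is_accepted("westeurope", "<your-api-key>")` performs a real network request. A `False` result usually points to a mistyped key or a region that does not match the Speech resource.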

Optional: Power Automate connector integration

🔎Optional step: The Nimbus Power Automate Connector can extract Transcription/Summarization data for implementing additional use cases. To achieve this, the following steps need to be performed by an administrator:

- You need to set up a Power Automate flow that uses the “Companion” Trigger Event to react to any ongoing transcription session event.

- You then require the “Companion” Flow Action to capture the data for any further processing.

💡Some Use Case examples from our Knowledge Base:

Live Caption

✅ Precondition: Transcription must be set up first to use Live Caption.

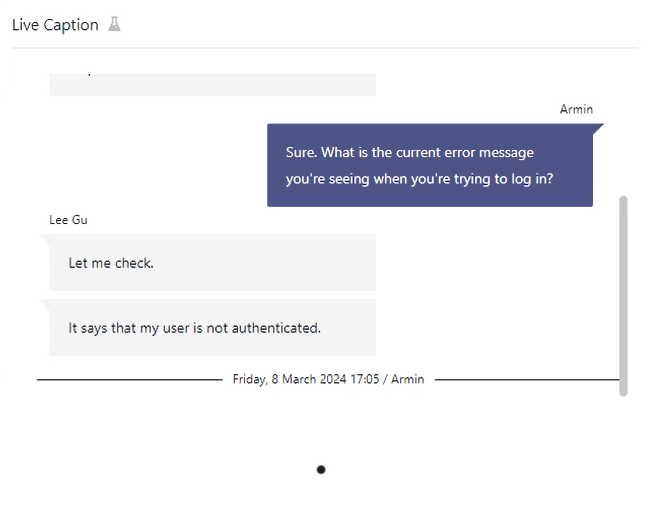

Live captions are audio transcriptions generated in real time during call sessions. When a call is accepted, a bot is invited to the call to start the audio transcription of the session. As a user, you see your spoken conversation with the caller in the Live Caption widget. Live Caption is only visible during ongoing calls, either in My Sessions as the “Companion” widget or in the sidebar of Attendant Console.


Transcription Saving

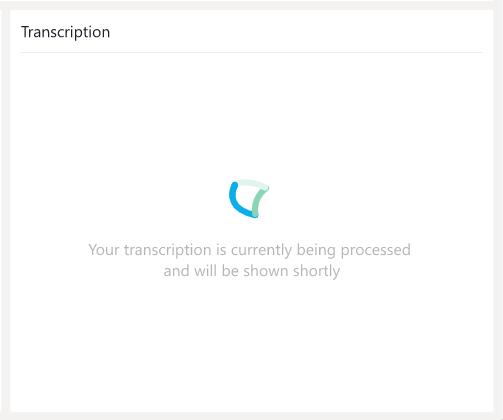

When the call session ends, the live caption is saved as a transcript. If you have an ACW configured for the service, you get the following message while the transcript is being saved: "Your transcription is currently being processed and will be shown shortly".

⮑ Once the final transcription is processed, it appears in the Transcription widget.

💡Good to know:

- You can also access your prior session transcripts by clicking on a concluded session in My Sessions. If available, the transcript of the session is then opened in the “Companion” widget.

- Once enabled, Transcription is also available in Attendant Console within the sidebar.

- You can also opt in to showing a Summarization of the transcript, configurable via Companion Service Settings.

- All data generated by Transcription can also be retrieved and handled by Nimbus Power Automate Connector via respective “Companion” Flow Actions and Trigger Events.

- If Companion transcription is configured on the service level and the agent has the required license, all incoming calls are transcribed. If you wish to offer an opt-out from transcription, the call must be transferred to a non-transcribed Nimbus service.

💡 We are working on improved behavior to allow a more flexible opt-out feature in the future.

Troubleshooting


Not seeing any transcripts in the Transcript widget can have several causes. The following table lists error messages and explains why they are shown.

| Message | 🤔 Why do I see the message? |
|---|---|
| No transcription is available for this interaction. | There was no transcription generated for the selected conversation. |
| No transcription is available for this interaction. Task was not accepted. | The selected call was not accepted and therefore there is no transcribed conversation. |
| Transcription not available. User Transcription License missing. | The user doesn't have a Transcription License assigned. Transcription Licenses can be assigned to users in Users > General User Settings > Licenses. |

Known Limitations


KNOWN TRANSCRIPTION & SUMMARIZATION LIMITATIONS

💡We are actively working on further improvements to the following limitations:

- Transcription needs to be enabled in order to use Live Captioning and Summarization. Speech Recognizers are required for all features.

- Transcription data is not part of service/user transfers. Data is kept within the current customer/user session.

- Transcription is currently only supported for direct inbound calls. Outbound calls (e.g. Call On Behalf) and Consultation calls (to either users or services) are not yet supported.

- All AI-generated data (Transcription, Summarization) is currently stored for 30 days. Additional configuration options are planned for 2026.
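The conditions above can be summarized as a small decision sketch. It is purely illustrative and does not reflect how Nimbus is implemented: the `Call` type, its field names, and the direction values are hypothetical, and only the rules themselves (direct inbound calls only, transcription enabled on the service, required user license, 30-day storage) come from this page.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Current storage period for AI-generated data, as stated in the list above.
RETENTION = timedelta(days=30)


@dataclass
class Call:
    # Hypothetical direction values: "inbound", "outbound", "consultation".
    direction: str
    service_transcription_enabled: bool
    user_has_required_license: bool


def is_transcribed(call: Call) -> bool:
    """Return True only when every documented condition is met."""
    return (
        call.direction == "inbound"
        and call.service_transcription_enabled
        and call.user_has_required_license
    )


def retained_until(created_at: datetime) -> datetime:
    """Return the point in time up to which the data is currently stored."""
    return created_at + RETENTION


if __name__ == "__main__":
    call = Call("inbound", True, True)
    print(is_transcribed(call))
    print(retained_until(datetime(2025, 1, 1, tzinfo=timezone.utc)))
```

An outbound or consultation call fails the first check regardless of licensing, which matches the limitation that only direct inbound calls are supported.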

DESIGN NOTES

- Feature availability - Transcription relies on external services and APIs. When the feature is disabled or unavailable (e.g. throttling, settings or Microsoft service impediments) info messages are shown in the frontend UI widget. Nimbus task handling and user-customer interactions themselves are not affected by this and will continue normally.

- Scope limitation - Only the transcription between the Nimbus user and the customer is kept. Third parties and call conference attendees are not part of the transcribed content.

- Supervision - As long as Supervisors remain in Listen or Whisper mode during a conversation, their voice is not transcribed. Only during “Barge In” are they part of the conversation and included in the transcription.

- Summarization items generated from the Transcription might be missing when there is not enough data to draw from.